What Zero Downtime Deployment Really Means in Modern Software Delivery

Zero downtime deployment is often presented as a technical ideal. In practice, it is a delivery discipline focused on protecting user experience while software changes are released. At its core, zero downtime deployment means shipping new versions of an application without interrupting availability, performance, or data integrity for active users.

This definition matters because many teams equate zero downtime with “fast deployments” or “no visible outages”. Those are outcomes, not mechanisms. Zero downtime deployment is not a single tool or platform feature. It is a coordinated approach across architecture, release process, infrastructure, and operational decision-making.

In modern software delivery, especially for SaaS products and business-critical web applications, downtime is rarely acceptable. Users expect services to remain accessible while features evolve, bugs are fixed, and security patches are applied. This expectation applies whether the system serves a few thousand users or supports enterprise-scale workloads.

From an engineering perspective, zero downtime deployment works by ensuring that at no point are all users dependent on a single, unavailable version of the system. Traffic is gradually shifted, duplicated, or routed in a way that allows old and new versions to coexist safely. This can involve parallel environments, backward-compatible changes, and controlled rollout mechanisms.
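The idea of old and new versions coexisting while traffic shifts gradually can be sketched in a few lines. This is a minimal illustration, not a production router: the function names `bucket` and `choose_version` and the 100-bucket scheme are assumptions made for the example. Each user is hashed into a stable bucket so that they see the same version on every request while the rollout percentage grows.

```python
import hashlib


def bucket(user_id: str, buckets: int = 100) -> int:
    """Map a user ID to a stable bucket in the range [0, buckets)."""
    digest = hashlib.sha256(user_id.encode("utf-8")).hexdigest()
    return int(digest, 16) % buckets


def choose_version(user_id: str, new_version_percent: int) -> str:
    """Send a user to the new version if their bucket is below the rollout percentage."""
    return "new" if bucket(user_id) < new_version_percent else "old"


# Hypothetical usage: expose roughly 10% of users to the new version.
if __name__ == "__main__":
    for user in ("alice", "bob", "carol"):
        print(user, choose_version(user, new_version_percent=10))
```

Because the bucket depends only on the user ID, raising the percentage never moves a user back from the new version to the old one.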

Importantly, zero downtime deployment is not binary. Very few systems operate with absolute zero interruption under all conditions. The real goal is to reduce user-impacting downtime to a level that is operationally negligible and predictable. This distinction helps teams move away from unrealistic promises and towards measurable delivery reliability.

The business relevance is clear. Downtime directly affects revenue, trust, and brand perception. For subscription platforms, even short outages can trigger customer churn. For internal systems, downtime slows teams and increases operational costs. Zero downtime deployment aligns software delivery with business continuity, rather than treating releases as disruptive events.

It is also worth separating zero downtime deployment from related but distinct concepts. Continuous delivery enables frequent releases, but does not guarantee zero downtime. High availability keeps systems resilient to failures, but does not automatically protect against risky deployments. Zero downtime deployment sits at the intersection, ensuring that change itself does not become a source of instability.

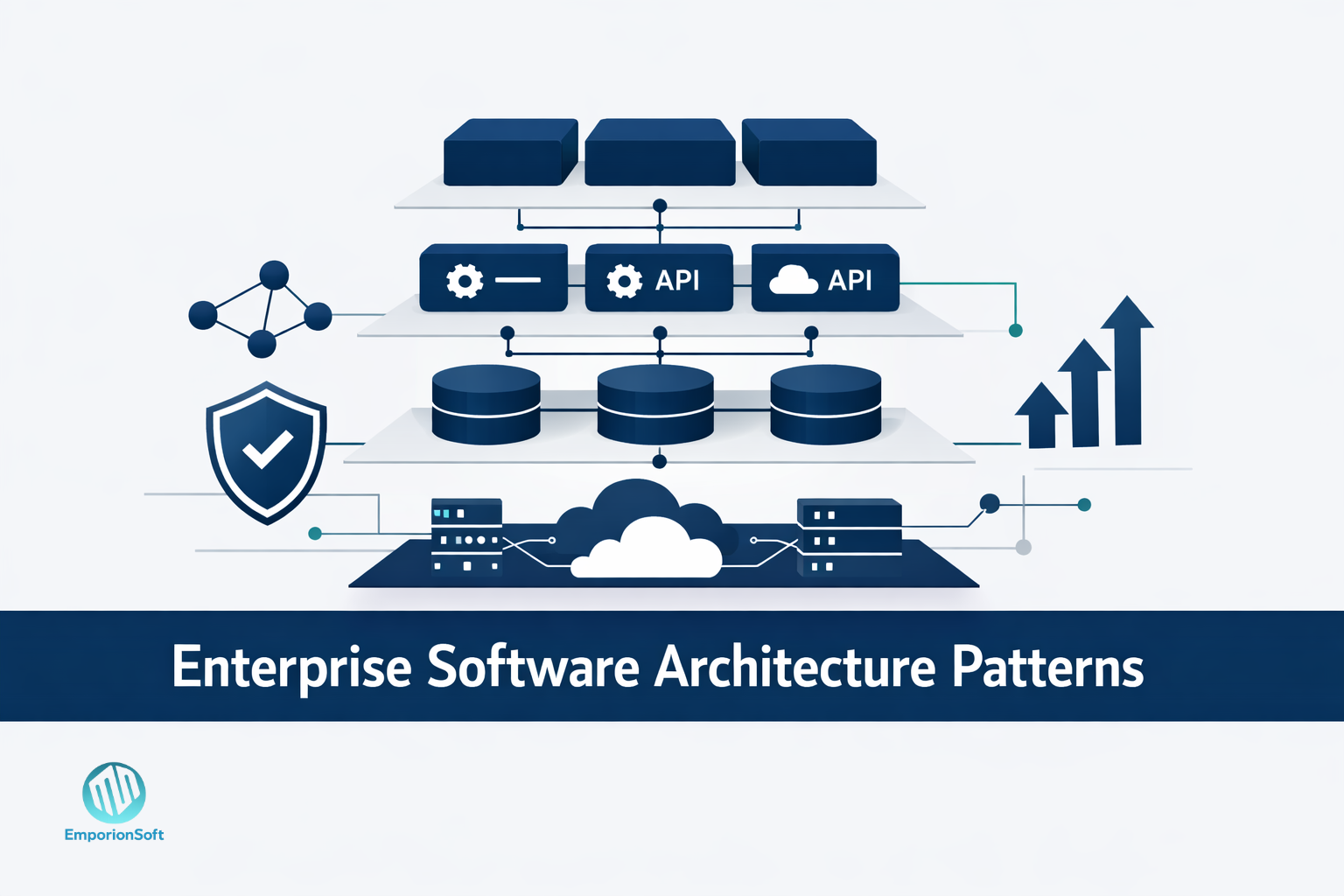

Modern delivery environments make this approach more achievable, but also more complex. Cloud platforms, container orchestration, and managed infrastructure reduce some operational burdens. At the same time, distributed systems introduce new failure modes that require careful release design. Zero downtime deployment therefore depends as much on engineering judgement as it does on tooling.

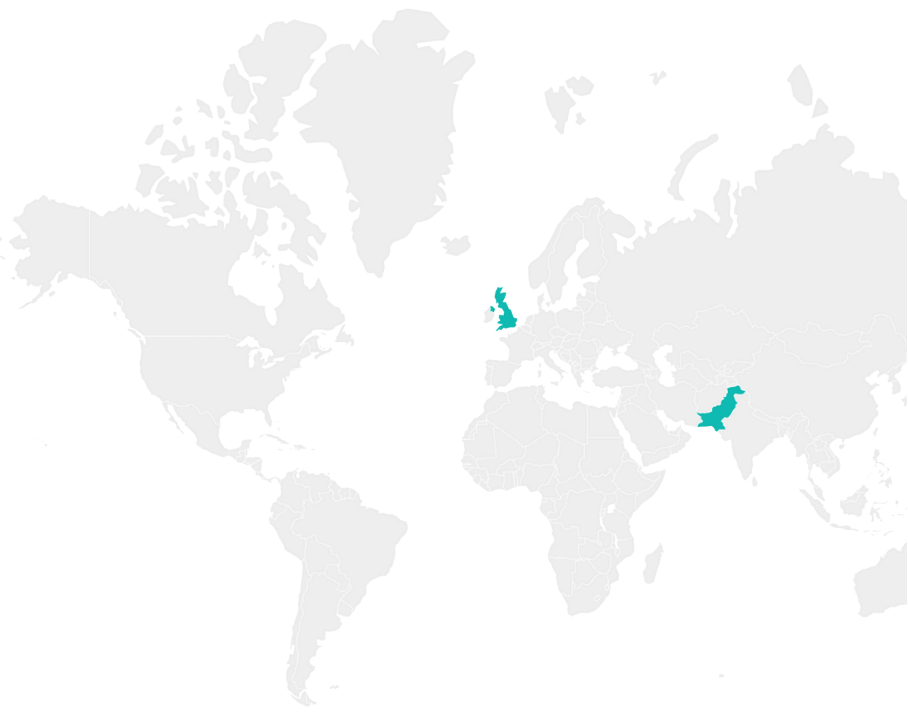

For organisations building or scaling digital products, this topic fits naturally within broader delivery and architecture conversations. It connects closely with service design, operational maturity, and long-term platform strategy. These areas are often explored in human-centred development engagements, such as those outlined on the EmporionSoft services overview and in their broader software delivery insights.

Understanding what zero downtime deployment truly means sets the foundation for the rest of the discussion. Before examining strategies, tools, or pipelines, teams need a shared definition grounded in real-world constraints rather than aspirational slogans. That clarity is what allows zero downtime deployment to move from theory into repeatable practice.

Why Downtime Is Still a Business Risk for Growing Digital Products

Downtime is often discussed as a technical inconvenience. For growing digital products, it is more accurately a business risk that compounds over time. As software becomes more central to revenue generation, customer operations, and internal decision-making, even brief service interruptions can have outsized consequences.

For startups and SMEs, downtime directly undermines credibility. Early-stage products rely heavily on trust, especially when competing against larger, more established platforms. Users may tolerate missing features, but they are far less forgiving of systems that are unreliable or unavailable. In this context, zero downtime software deployment is not about perfection. It is about signalling operational maturity earlier than scale might suggest.

The financial impact is often underestimated. Lost transactions during an outage are only the visible cost. Less obvious are the follow-on effects: increased support volume, delayed sales cycles, contract penalties, and engineering time diverted into incident response. When downtime coincides with a release, teams also lose confidence in their ability to ship safely, which slows future delivery.

For SaaS businesses, downtime directly conflicts with recurring revenue models. Customers expect continuous access, particularly when software underpins their own workflows. This is why many service agreements focus on availability guarantees. Even when penalties are not contractually enforced, repeated disruptions create friction that erodes long-term customer value. These dynamics are frequently surfaced when teams start tracking delivery outcomes through structured approaches such as those discussed in technology ROI measurement frameworks.
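To make availability guarantees concrete, it helps to translate a percentage into minutes. The sketch below is simple arithmetic under an assumed 30-day month; the function name `allowed_downtime_minutes` is illustrative and not taken from any particular agreement.

```python
def allowed_downtime_minutes(availability_percent: float, days: int = 30) -> float:
    """Return the downtime budget, in minutes, implied by an availability target."""
    total_minutes = days * 24 * 60
    return total_minutes * (1 - availability_percent / 100)


if __name__ == "__main__":
    for target in (99.0, 99.9, 99.99):
        budget = round(allowed_downtime_minutes(target), 2)
        print(f"{target}% over 30 days allows about {budget} minutes of downtime")
```

Under these assumptions, a 99.9% target leaves roughly 43 minutes per month, so a single release that takes the service offline for a quarter of an hour consumes about a third of the budget.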

Larger organisations face a different but related problem. As products scale, deployment events affect more users, across more regions, with tighter regulatory and data protection requirements. Downtime in these environments can trigger compliance concerns or reputational damage that extends well beyond the technical incident itself. What begins as a deployment issue quickly becomes a leadership and governance problem.

A common misconception is that downtime is an unavoidable cost of progress. In reality, most downtime during releases is the result of avoidable design and process decisions. Tight coupling between components, non-backward-compatible database changes, and manual deployment steps all increase the likelihood that releases will interrupt service. These issues often emerge from architectural decisions made early, without revisiting them as the product grows. Patterns for addressing this evolution are explored in more depth in discussions on enterprise architecture design approaches.

It is also important to recognise that downtime risk increases as delivery frequency increases. Teams adopting faster release cycles without corresponding changes to deployment strategy often experience more frequent incidents, not fewer. This is where zero downtime deployment becomes strategically relevant. It allows organisations to move quickly without turning each release into a high-risk event.

From a leadership perspective, the question is no longer whether downtime can be eliminated entirely. The more practical question is how much risk the business is willing to accept during change. Zero downtime deployment reframes this conversation by treating releases as routine operational activities rather than exceptional disruptions.

Understanding downtime as a business risk, rather than a purely technical failure, creates the urgency needed to invest in better deployment practices. It also sets clear expectations for why zero downtime deployment matters, before examining the constraints and challenges that often stand in the way.

Technical and Organisational Constraints That Block Zero Downtime

Most teams understand the value of zero downtime deployment. Fewer are able to achieve it consistently. The gap is rarely caused by a lack of motivation. It is usually the result of accumulated technical and organisational constraints that make safe releases difficult to execute.

One of the most common blockers is legacy architecture. Applications designed around tightly coupled components or shared state assume that everything is deployed at once. In these systems, even small changes can require coordinated updates across services, databases, and clients. This makes parallel versions hard to run safely, which is a core requirement for zero downtime deployment.

Database design is often the most fragile point. Schema changes that are not backward compatible force teams into maintenance windows or risky, all-at-once migrations. Over time, these shortcuts become embedded in delivery habits. Teams learn to expect downtime during releases, rather than questioning the underlying assumptions that make it necessary.
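One common way to keep schema changes backward compatible is to split them into an additive step, a backfill, and a later removal step, often described as an expand and contract migration. The sketch below uses an in-memory SQLite database; the `users` table and its column names are hypothetical and exist only to show the sequence.

```python
import sqlite3


def run_expand_phase() -> list:
    """Apply an additive schema change while old-style writes keep working."""
    conn = sqlite3.connect(":memory:")
    conn.execute("CREATE TABLE users (id INTEGER PRIMARY KEY, full_name TEXT NOT NULL)")
    conn.execute("INSERT INTO users (full_name) VALUES ('Ada Lovelace')")

    # Expand: add the new column as nullable, so the old application version,
    # which does not know about it, can keep inserting rows.
    conn.execute("ALTER TABLE users ADD COLUMN display_name TEXT")

    # A write from the old version still succeeds against the expanded schema.
    conn.execute("INSERT INTO users (full_name) VALUES ('Grace Hopper')")

    # Backfill: copy existing values into the new column.
    conn.execute("UPDATE users SET display_name = full_name WHERE display_name IS NULL")

    # The new version reads the new column and falls back to the old one.
    rows = conn.execute(
        "SELECT COALESCE(display_name, full_name) FROM users ORDER BY id"
    ).fetchall()
    conn.close()

    # Contract (dropping full_name) would ship in a later release, once no
    # running version reads or writes the old column. It is not executed here.
    return [row[0] for row in rows]


if __name__ == "__main__":
    print(run_expand_phase())
```

The point of the ordering is that every intermediate schema state is usable by both the old and the new application version, which removes the need for a maintenance window.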

Operational maturity also plays a significant role. Zero downtime deployment depends on reliable observability, predictable environments, and controlled release processes. Teams without clear monitoring, alerting, and rollback mechanisms are forced to be conservative. In these conditions, manual interventions become the norm, increasing both deployment time and failure risk.

From an organisational perspective, team structure can quietly undermine deployment goals. When development, operations, and security responsibilities are fragmented, no single group owns the end-to-end release outcome. Decisions are optimised locally rather than globally. This often results in deployment pipelines that technically function but are brittle under real-world conditions, a challenge frequently seen in smaller teams transitioning towards DevSecOps practices such as those discussed in practical DevSecOps approaches for small teams.

Another constraint is delivery pressure. Tight deadlines encourage teams to prioritise feature output over deployment safety. Temporary workarounds become permanent, and technical debt accumulates in the release process itself. Over time, this debt limits how often and how safely changes can be deployed. Addressing these issues requires recognising deployment reliability as a product capability, not just an engineering concern, a theme closely linked to managing long-term delivery health as outlined in technical debt management strategies.

Skills and experience gaps also matter. Zero downtime deployment techniques require familiarity with versioning strategies, traffic management, and failure isolation. Teams that have grown rapidly or inherited systems may lack shared knowledge in these areas. Without deliberate investment in learning and documentation, deployment practices remain inconsistent and fragile.

Finally, there is often a mismatch between ambition and reality. Leadership may expect zero downtime outcomes without allocating time or resources to redesign deployment pipelines, refactor critical components, or improve testing coverage. This disconnect creates frustration on both sides. Engineers feel pressure without support, while stakeholders see continued risk despite investment.

Recognising these constraints is not about assigning blame. It is about creating an honest baseline. Zero downtime deployment is achievable for most modern applications, but only when technical design, team structure, and delivery incentives are aligned. Understanding where constraints exist is the first step towards reducing release risk, which becomes essential when examining why deployments fail in production environments.

Failure Modes, Release Risk, and Why Most Deployments Break in Production

Production deployments rarely fail for a single reason. They break because multiple small risks align at the same moment. Understanding these failure modes is essential for designing rollback strategies that keep zero downtime deployment working under pressure, not just in theory.

One common failure point is assumption drift. Code is tested in environments that differ subtly from production, whether through configuration, data volume, or traffic patterns. When a release reaches real users, those differences surface quickly. Without isolation between versions, a single faulty assumption can interrupt service for everyone.

Another frequent cause is incomplete backward compatibility. Changes to APIs, data models, or authentication flows often assume that all consumers update simultaneously. In practice, clients lag behind, caches persist longer than expected, and background jobs run on older versions. These mismatches create hard-to-diagnose errors that only appear once traffic is live.

Release timing also introduces risk. Deployments during peak usage amplify the impact of any issue. Teams often choose these windows to minimise coordination overhead, but the trade-off is reduced room for recovery. When rollback requires database reversions or redeploying entire environments, even a small fault can escalate into visible downtime.

Human factors are just as important. Manual steps increase cognitive load and introduce variability. Under pressure, engineers may skip validation checks or misinterpret alerts. Without clear runbooks and rehearsed rollback paths, decision-making slows precisely when speed matters most. This is why zero downtime deployment best practices emphasise predictability and repeatability over heroic interventions.

Testing gaps are another major contributor. Functional tests may pass while performance or concurrency issues remain hidden. Load-dependent failures often surface only at scale, long after a deployment has completed. Teams that rely solely on pre-release testing without production feedback loops tend to discover issues too late, a pattern commonly observed in teams that underinvest in staged validation approaches such as those described in structured beta testing programmes.

Security and compliance changes can also trigger unexpected failures. Configuration updates, certificate rotations, or permission changes may behave differently across environments. When these changes are bundled with application releases, isolating the root cause becomes difficult. In regulated environments, this can extend recovery time due to approval or audit requirements, increasing the overall impact.

What differentiates resilient teams is not the absence of failures, but the ability to contain them. Effective rollback strategies assume that something will go wrong. They focus on restoring service quickly, even if the underlying issue remains unresolved. This requires deployments that are reversible, observable, and decoupled from irreversible changes.

From a risk management perspective, zero downtime deployment is about reducing blast radius. Instead of exposing all users to a new version at once, risk is introduced gradually and deliberately. Failures become signals rather than outages. This mindset aligns closely with resilience frameworks used in cloud-native environments, such as those outlined in Azure application resiliency guidance.

By examining why deployments fail in production, teams can move beyond reactive fixes. The next step is to explore deployment strategies and patterns that are explicitly designed to absorb these risks, rather than amplify them.

Core Zero Downtime Deployment Strategies and Patterns Explained

Zero downtime deployment is achieved through a set of repeatable strategies rather than a single approach. Each strategy addresses risk in a different way, and none is universally correct. The effectiveness of a zero downtime deployment strategy depends on system architecture, team maturity, and the type of change being released.

One of the most widely discussed patterns is blue-green deployment. In this model, two production environments run in parallel. One serves live traffic, while the other hosts the new release. Traffic is switched only when the new version is verified. This approach reduces risk by making rollback straightforward, but it roughly doubles infrastructure requirements while both environments are running and assumes strong environment parity. It also works best when database changes are minimal or backward compatible, which is not always the case in evolving systems.
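The switch-and-rollback behaviour of this pattern can be sketched as a small state machine. The class below is a simplified illustration under stated assumptions: `BlueGreenRouter` and its health-check callables are hypothetical names, and a real setup would change a load balancer or DNS target rather than a Python attribute.

```python
from typing import Callable, Dict, Optional


class BlueGreenRouter:
    """Track which environment is live and switch only after verification."""

    def __init__(self, environments: Dict[str, Callable[[], bool]], live: str):
        self.environments = environments  # environment name -> health check
        self.live = live
        self.previous: Optional[str] = None

    def switch_to(self, target: str) -> bool:
        """Point traffic at the target environment if its health check passes."""
        if target not in self.environments:
            raise KeyError(f"unknown environment: {target}")
        if not self.environments[target]():
            return False  # keep serving from the current environment
        self.previous, self.live = self.live, target
        return True

    def rollback(self) -> bool:
        """Return traffic to the environment that was live before the last switch."""
        if self.previous is None:
            return False
        self.live, self.previous = self.previous, self.live
        return True


if __name__ == "__main__":
    router = BlueGreenRouter({"blue": lambda: True, "green": lambda: True}, live="blue")
    print(router.switch_to("green"), router.live)
    print(router.rollback(), router.live)
```

Rollback is cheap here only because the previous environment is left running and untouched, which is also where the extra infrastructure cost comes from.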

Rolling updates take a different approach. Instead of switching environments, instances are updated incrementally. At any given moment, both old and new versions handle traffic. This pattern is common in containerised environments and reduces infrastructure overhead. However, it requires careful version compatibility and robust health checks. Without these, rolling updates can introduce subtle inconsistencies that are harder to detect than full outages. This is why the distinction between a rolling update and zero downtime matters: a rolling update does not guarantee zero downtime unless it is implemented with additional safeguards.
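A rolling update with a health gate can be simulated in a few lines. This is a toy model, assuming a list of version labels stands in for running instances and that `is_healthy` is a caller-supplied check; it does not represent any orchestrator's actual algorithm.

```python
from typing import Callable, List


def rolling_update(
    instances: List[str],
    new_version: str,
    is_healthy: Callable[[str], bool],
    batch_size: int = 1,
) -> List[str]:
    """Replace instances batch by batch, halting at the first unhealthy batch."""
    updated = list(instances)
    for start in range(0, len(updated), batch_size):
        batch = range(start, min(start + batch_size, len(updated)))
        previous = [updated[i] for i in batch]
        for i in batch:
            updated[i] = new_version
        if not all(is_healthy(updated[i]) for i in batch):
            # Restore this batch and stop, leaving the remaining instances untouched.
            for i, old in zip(batch, previous):
                updated[i] = old
            break
    return updated


if __name__ == "__main__":
    print(rolling_update(["v1", "v1", "v1"], "v2", is_healthy=lambda version: True))
```

The batch size controls how much capacity is at risk at any moment, which is the main lever for trading rollout speed against exposure.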

Canary deployments focus on risk isolation. A small subset of users is exposed to the new version first, while the majority remain on the stable release. Metrics and user behaviour are monitored closely before wider rollout. This strategy is particularly effective for user-facing features and performance-sensitive changes. It does, however, require mature observability and clear success criteria. Without reliable signals, teams may either promote risky releases too quickly or delay unnecessarily.
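Clear success criteria can be written down as an explicit gate. The following sketch compares a canary's error rate with the stable baseline; the thresholds (`min_requests`, `max_error_ratio`, and the 0.1% floor) are illustrative assumptions, not recommended values.

```python
def canary_decision(
    baseline_errors: int,
    baseline_requests: int,
    canary_errors: int,
    canary_requests: int,
    min_requests: int = 500,
    max_error_ratio: float = 1.5,
) -> str:
    """Return "hold", "promote", or "rollback" for a canary release."""
    if canary_requests < min_requests:
        return "hold"  # not enough traffic yet to judge the canary
    baseline_rate = baseline_errors / baseline_requests if baseline_requests else 0.0
    canary_rate = canary_errors / canary_requests
    # A small absolute floor stops a zero-error baseline from failing every canary.
    allowed_rate = max(baseline_rate * max_error_ratio, 0.001)
    return "promote" if canary_rate <= allowed_rate else "rollback"


if __name__ == "__main__":
    print(canary_decision(10, 10_000, 1, 1_000))
```

The "hold" outcome matters as much as the other two: it encodes the rule that a lack of signal is not the same as a good signal.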

Feature toggles offer a complementary technique rather than a standalone strategy. By decoupling deployment from release, teams can ship code safely without activating it immediately. This reduces pressure on deployment windows and allows rapid rollback by disabling features rather than redeploying code. Feature toggles add operational complexity and must be managed carefully to avoid long-term configuration sprawl.
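Decoupling deployment from release can be shown with a very small toggle. In this sketch the `FeatureFlags` class, the `new_discount_engine` flag, and the 10% discount are all hypothetical; real systems usually read flags from a configuration service rather than an in-memory dictionary.

```python
class FeatureFlags:
    """An in-memory flag store; unknown flags default to off."""

    def __init__(self, flags=None):
        self._flags = dict(flags or {})

    def is_enabled(self, name: str) -> bool:
        return self._flags.get(name, False)

    def set(self, name: str, enabled: bool) -> None:
        self._flags[name] = enabled


def checkout_total(prices, flags: FeatureFlags) -> float:
    """Compute a total, using the new code path only when its flag is on."""
    subtotal = sum(prices)
    if flags.is_enabled("new_discount_engine"):
        return round(subtotal * 0.9, 2)  # new behaviour, shipped but dormant by default
    return subtotal


if __name__ == "__main__":
    flags = FeatureFlags()
    print(checkout_total([10.0, 20.0], flags))   # old path
    flags.set("new_discount_engine", True)
    print(checkout_total([10.0, 20.0], flags))   # new path, no redeploy needed
```

Turning the flag off again restores the old behaviour immediately, which is the rollback path the paragraph above describes.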

More advanced zero downtime deployment patterns emerge in distributed systems. Shadow traffic, where requests are duplicated to a new version without affecting responses, allows teams to validate behaviour under real load. Contract testing between services ensures compatibility during mixed-version operation. These techniques are often associated with microservices architectures, but they can be applied selectively rather than wholesale, as discussed in evaluations of microservices versus serverless trade-offs.
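Shadow traffic can be illustrated with a wrapper that always answers from the stable version and only records what the candidate would have returned. The function name `handle_with_shadow` and the handlers are assumptions for the example, and the shadow call is made synchronously here for simplicity, whereas a real system would normally issue it asynchronously so that it cannot slow the user's request.

```python
from typing import Any, Callable, List, Tuple


def handle_with_shadow(
    request: Any,
    primary: Callable[[Any], Any],
    shadow: Callable[[Any], Any],
    mismatches: List[Tuple[Any, Any, Any]],
) -> Any:
    """Serve the primary response and record any divergence from the shadow version."""
    response = primary(request)
    try:
        shadow_response = shadow(request)
        if shadow_response != response:
            mismatches.append((request, response, shadow_response))
    except Exception as exc:  # a shadow failure must never reach the user
        mismatches.append((request, response, repr(exc)))
    return response


if __name__ == "__main__":
    log: List[Tuple[Any, Any, Any]] = []
    print(handle_with_shadow(3, lambda x: x * 2, lambda x: x + x, log), log)
```

The recorded mismatches become the evidence for or against promoting the new version, without any user having received its output.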

Choosing between these strategies is less about technical preference and more about constraint management. Blue-green deployments suit simpler systems with clear boundaries. Rolling updates align well with horizontally scalable services. Canary releases work best when metrics are trusted and response time matters. In practice, mature teams combine multiple zero downtime deployment techniques rather than relying on one pattern exclusively.

It is also important to avoid false equivalence. Zero downtime is not automatically achieved by adopting a named pattern. Without backward-compatible changes, reliable health checks, and controlled traffic routing, these strategies degrade into risky deployments with more moving parts. Architecture decisions, such as service boundaries and dependency management, heavily influence which patterns are viable, a theme explored further in enterprise architecture design patterns.

Understanding these strategies provides a framework for decision-making. The next step is examining how these patterns are implemented in real delivery environments through pipelines, automation, and governance mechanisms that turn strategy into execution.

Deployment Frameworks, CI/CD Pipelines, and Automation in Practice

Zero downtime deployment strategies only become reliable when they are embedded into delivery frameworks and automated pipelines. Without this integration, even well-designed deployment patterns degrade under time pressure or team turnover. The goal of a zero downtime deployment pipeline is not speed alone, but repeatability and controlled risk.

At a practical level, CI/CD pipelines provide the structure needed to enforce consistency. Automated builds, tests, and deployment steps reduce manual intervention, which is one of the most common sources of release failure. More importantly, pipelines make deployment behaviour explicit. Each stage represents a decision point where quality and safety checks can be applied before changes reach users.

For zero downtime deployment, the pipeline must support parallelism. This includes the ability to deploy new versions alongside existing ones, route traffic selectively, and validate system health continuously. Pipelines that only support stop-and-replace deployments cannot deliver zero downtime outcomes, regardless of how fast they run. This is why pipeline design is as critical as application code.

Automation plays a dual role. First, it removes variability. Tasks such as infrastructure provisioning, configuration management, and secret handling should behave the same way every time. Second, it enables faster feedback. Automated health checks, smoke tests, and monitoring integrations surface problems early, when rollback is still low cost. Without automation, teams often discover issues only after users report them.

CI/CD pipelines also act as governance mechanisms. They encode deployment policies that would otherwise rely on tribal knowledge. For example, enforcing backward-compatible database migrations or requiring approval for production traffic shifts helps align engineering decisions with organisational risk tolerance. These controls are particularly important for small and mid-sized teams, where individuals often carry multiple responsibilities, a challenge explored in practical DevSecOps models for lean teams.
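A policy such as "migrations must be backward compatible" can be partially encoded as a pipeline check. The sketch below is a deliberately naive heuristic: the pattern list is an assumption, statements are split on semicolons without real SQL parsing, and a match should prompt human review rather than prove a migration unsafe.

```python
import re

# Statement shapes that commonly break older application versions (illustrative list).
DESTRUCTIVE_PATTERNS = [
    r"\bDROP\s+TABLE\b",
    r"\bDROP\s+COLUMN\b",
    r"\bRENAME\s+(COLUMN|TO)\b",
    r"\bALTER\s+COLUMN\b.*\bNOT\s+NULL\b",
]


def find_breaking_statements(migration_sql: str) -> list:
    """Return the statements in a migration that match a destructive pattern."""
    findings = []
    for statement in migration_sql.split(";"):
        text = " ".join(statement.split())  # collapse whitespace and newlines
        if text and any(re.search(p, text, re.IGNORECASE) for p in DESTRUCTIVE_PATTERNS):
            findings.append(text)
    return findings


if __name__ == "__main__":
    sql = "ALTER TABLE users ADD COLUMN display_name TEXT; ALTER TABLE users DROP COLUMN full_name;"
    for finding in find_breaking_statements(sql):
        print("needs review:", finding)
```

A pipeline stage could fail, or require explicit approval, whenever this function returns a non-empty list.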

Tooling choices matter, but they should follow principles rather than drive them. Most modern CI/CD platforms can support zero downtime deployment automation when configured correctly. The differentiator is how pipelines are structured, not which vendor is selected. Pipelines that tightly couple build, test, and release stages limit flexibility. In contrast, modular pipelines allow teams to pause, observe, and promote changes gradually.

Another critical aspect is environment parity. Zero downtime deployment assumes that behaviour observed in pre-production environments is representative of production. Infrastructure-as-code and configuration automation reduce drift between environments, making pipeline outcomes more trustworthy. This becomes increasingly important in hybrid and multi-cloud setups, where inconsistency can silently undermine deployment safety, as discussed in hybrid cloud delivery strategies.

Automation also supports rollback discipline. Pipelines should make reverting a change as routine as deploying it. This includes versioned artifacts, reversible configuration changes, and clear rollback triggers. When rollback paths are automated and tested, teams are more willing to deploy frequently, knowing that recovery is predictable.
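Versioned artifacts and explicit rollback triggers can be modelled very simply. In the sketch below, `ReleaseHistory`, `should_roll_back`, and the threshold values are hypothetical; the intent is only to show that rollback becomes a routine operation once the previous artifact and the trigger conditions are both recorded.

```python
from dataclasses import dataclass, field
from typing import List, Optional


@dataclass
class ReleaseHistory:
    """Keep an ordered record of deployed artifact versions."""

    deployed: List[str] = field(default_factory=list)

    def deploy(self, artifact: str) -> None:
        self.deployed.append(artifact)

    @property
    def current(self) -> Optional[str]:
        return self.deployed[-1] if self.deployed else None

    def rollback(self) -> Optional[str]:
        """Revert to the previous artifact, if there is one, and return the live version."""
        if len(self.deployed) >= 2:
            self.deployed.pop()
        return self.current


def should_roll_back(
    error_rate: float,
    p95_latency_ms: float,
    max_error_rate: float = 0.02,
    max_p95_latency_ms: float = 800.0,
) -> bool:
    """Trigger a rollback when either health signal exceeds its threshold."""
    return error_rate > max_error_rate or p95_latency_ms > max_p95_latency_ms


if __name__ == "__main__":
    history = ReleaseHistory()
    history.deploy("app-1.4.0")
    history.deploy("app-1.5.0")
    if should_roll_back(error_rate=0.05, p95_latency_ms=300.0):
        print("rolled back to", history.rollback())
```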

In practice, zero downtime deployment pipelines evolve incrementally. Teams rarely design them perfectly from the start. Instead, they identify high-risk steps and automate those first, gradually extending coverage. The key is intentionality. Pipelines should reflect how the system is meant to fail safely, not just how it is meant to succeed.

With strategy and automation in place, the remaining challenge is execution across real-world environments. Cloud platforms, Kubernetes clusters, and microservices architectures each introduce specific considerations that shape how zero downtime deployment is applied in practice.

Executing Zero Downtime Deployment Across Cloud, Kubernetes, and Microservices

Execution is where zero downtime deployment shifts from design intent to operational reality. Cloud platforms, Kubernetes, and microservices architectures all promise flexibility, but they also introduce new layers of complexity that shape how zero downtime deployment is actually achieved.

In cloud environments, elasticity is both an advantage and a risk. The ability to scale infrastructure on demand makes parallel deployments feasible, but it can also mask inefficient release practices. Teams may rely on overprovisioning rather than disciplined rollout control. Zero downtime deployment on cloud platforms requires deliberate traffic management, clear environment boundaries, and strong observability, not just automated scaling.

Kubernetes has become a common foundation for zero downtime deployment, largely because it natively supports rolling updates, health checks, and declarative configuration. However, these features are often misunderstood. A default rolling update does not guarantee zero downtime. If readiness probes are missing or poorly defined, Kubernetes may route traffic to pods that are not yet able to serve it, and misconfigured resource requests or limits can leave pods throttled or killed under load. Zero downtime deployment in Kubernetes depends on treating these primitives as safety mechanisms rather than configuration afterthoughts.
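The principle behind readiness checks can be shown without any Kubernetes API. The toy model below is an assumption-laden sketch: `Instance` and `routable` are invented names, and the logic only illustrates that an instance should receive traffic after it reports ready and should stop receiving traffic before it is terminated.

```python
class Instance:
    """A service instance that separates "running" from "ready for traffic"."""

    def __init__(self, name: str):
        self.name = name
        self.ready = False   # not ready until warm-up has finished
        self.in_flight = 0   # requests currently being handled

    def finish_startup(self) -> None:
        self.ready = True

    def begin_shutdown(self) -> None:
        self.ready = False   # stop accepting new traffic first

    def can_terminate(self) -> bool:
        """Safe to stop only once unready and fully drained."""
        return not self.ready and self.in_flight == 0


def routable(instances) -> list:
    """Return the names of instances that may receive new requests."""
    return [instance.name for instance in instances if instance.ready]


if __name__ == "__main__":
    old, new = Instance("old"), Instance("new")
    old.finish_startup()
    print(routable([old, new]))   # only the old instance while the new one warms up
    new.finish_startup()
    old.begin_shutdown()
    print(routable([old, new]), old.can_terminate())
```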

State management remains one of the hardest challenges. Stateless services align well with zero downtime deployment patterns, but most real systems include shared databases, message queues, or caches. Schema evolution, connection handling, and data consistency must be addressed explicitly. In microservices environments, this often requires versioned APIs and consumer-driven contracts to allow multiple service versions to operate concurrently without conflict.
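A consumer-driven contract can be reduced to its essence: each consumer states which fields it relies on, and the provider checks every candidate response against that list. The sketch below assumes a contract expressed as a mapping from field name to Python type; the function name and the example payloads are hypothetical.

```python
def contract_violations(response: dict, contract: dict) -> list:
    """List the ways a provider response breaks a consumer's stated expectations."""
    violations = []
    for field_name, expected_type in contract.items():
        if field_name not in response:
            violations.append(f"missing field: {field_name}")
        elif not isinstance(response[field_name], expected_type):
            violations.append(
                f"wrong type for {field_name}: expected {expected_type.__name__}"
            )
    return violations


if __name__ == "__main__":
    billing_contract = {"id": int, "email": str}

    # Adding a field is compatible with the consumer's contract.
    print(contract_violations({"id": 1, "email": "a@example.com", "plan": "pro"}, billing_contract))

    # Removing a field the consumer depends on is not.
    print(contract_violations({"id": 1, "plan": "pro"}, billing_contract))
```

Extra fields pass the check while removed or retyped fields fail it, which mirrors the rule that additive changes are usually safe during mixed-version operation.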

Microservices architectures can either enable or obstruct zero downtime deployment. When services are loosely coupled and independently deployable, risk is naturally isolated. When dependencies are implicit or poorly documented, deployments become fragile. Teams that adopt microservices without investing in service boundaries and communication standards often experience more deployment incidents, not fewer. These trade-offs are explored further in discussions of scalable API design for SaaS platforms.

Cloud provider specifics also influence execution. Managed load balancers, traffic routing rules, and deployment services vary across platforms. While the underlying principles remain consistent, implementation details differ. Zero downtime deployment on AWS may emphasise traffic shifting and health-based routing, while Azure environments often integrate deployment logic closely with platform-native monitoring. The risk lies in becoming dependent on platform-specific behaviour without understanding its failure modes.

Observability is the common denominator across all environments. Metrics, logs, and traces provide the signals needed to decide whether a deployment is safe to continue or should be halted. Without reliable observability, advanced deployment techniques such as canary releases or progressive rollouts lose their effectiveness. Execution becomes guesswork rather than controlled experimentation.

Another often overlooked factor is operational ownership. As systems grow more distributed, responsibility for deployment outcomes can become blurred. Clear ownership of services, pipelines, and on-call response is essential. Zero downtime deployment requires fast decision-making during releases. Ambiguity slows response and increases impact when issues arise.

Ultimately, executing zero downtime deployment across cloud, Kubernetes, and microservices is less about mastering every platform feature and more about consistency. The same principles apply across environments: gradual exposure, strong health signals, reversible changes, and disciplined rollback. Teams that internalise these principles can adapt their execution as platforms evolve.

With execution patterns understood, the final step is stepping back to consider zero downtime deployment as an organisational capability. Sustaining it over time requires strategic alignment, investment choices, and leadership support beyond individual releases.

Building a Sustainable Zero Downtime Deployment Capability

Zero downtime deployment only delivers long-term value when it is treated as an organisational capability rather than a one-off technical achievement. Teams that succeed in this area shift their focus from individual releases to the systems, behaviours, and decisions that make safe change routine.

The first step is reframing how deployment success is measured. Output metrics such as release frequency or deployment duration matter, but they are incomplete. Sustainable zero downtime deployment prioritises outcome metrics such as user impact, recovery time, and confidence in rollback. These signals encourage teams to design for resilience instead of speed alone.
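Outcome metrics of this kind can be computed from a simple deployment log. The sketch below assumes each record is a dictionary with a `failed` flag and, for failed deployments, a `recovery_minutes` value; both field names and the sample data are invented for illustration.

```python
def change_failure_rate(deployments: list) -> float:
    """Fraction of deployments that caused a user-impacting failure."""
    if not deployments:
        return 0.0
    failed = sum(1 for d in deployments if d["failed"])
    return failed / len(deployments)


def mean_recovery_minutes(deployments: list) -> float:
    """Average time to restore service across failed deployments."""
    recoveries = [d["recovery_minutes"] for d in deployments if d["failed"]]
    if not recoveries:
        return 0.0
    return sum(recoveries) / len(recoveries)


if __name__ == "__main__":
    log = [
        {"failed": False},
        {"failed": True, "recovery_minutes": 12.0},
        {"failed": False},
        {"failed": True, "recovery_minutes": 18.0},
    ]
    print(change_failure_rate(log), mean_recovery_minutes(log))
```

Tracking these two numbers alongside release frequency shows whether faster delivery is being bought at the cost of user impact.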

Leadership alignment is critical. Zero downtime deployment best practices often require short-term investment in refactoring, automation, and observability. These efforts may not produce immediate feature gains, but they reduce delivery risk over time. When leadership understands this trade-off, teams are given the space to improve deployment foundations without constant delivery pressure.

Process discipline plays an equally important role. Clear release criteria, versioning standards, and compatibility rules create shared expectations across teams. This reduces ambiguity during deployments and makes decision-making faster when issues arise. Over time, these practices become institutional knowledge rather than individual expertise.

People and skills development should not be overlooked. Zero downtime deployment relies on engineers who understand system behaviour under change, not just how to write code. Investing in shared learning, post-incident reviews, and cross-functional collaboration helps teams build this intuition. The result is fewer surprises and faster responses when deployments do not go as planned.

Technology choices should support, not dictate, deployment strategy. Tools and platforms evolve quickly, but the principles behind zero downtime deployment remain stable. Organisations that anchor their approach in principles can adapt pipelines, cloud providers, or orchestration platforms without resetting their delivery model. This flexibility becomes especially valuable as systems scale or business priorities shift.

Governance also needs to mature alongside deployment capability. As products grow, so do regulatory, security, and availability expectations. Zero downtime deployment provides a foundation for meeting these demands, but only when deployment controls are visible and auditable. This is often where external reviews or technical due diligence exercises become useful, such as those described in technical due diligence for growing technology companies.

From a strategic perspective, zero downtime deployment supports long-term product health. It enables faster experimentation without amplifying risk, improves customer trust, and reduces operational drag on engineering teams. Over time, it becomes a competitive advantage that is difficult to replicate without similar investment and discipline.

For organisations assessing where they stand today, the most productive starting point is an honest evaluation of current deployment practices, constraints, and risk tolerance. This kind of assessment often sits alongside broader delivery and architecture discussions, such as the structured technology consultations outlined on the EmporionSoft consultation page, or the company's approach to building resilient delivery teams described on the EmporionSoft team overview.

Zero downtime deployment is not an endpoint. It is an ongoing capability that evolves with the product, the organisation, and the expectations of users. Teams that invest in it deliberately are better positioned to scale change without disruption, even as complexity increases.